Charting AI's Rise: 2025's Intelligence Breakthroughs Visualized

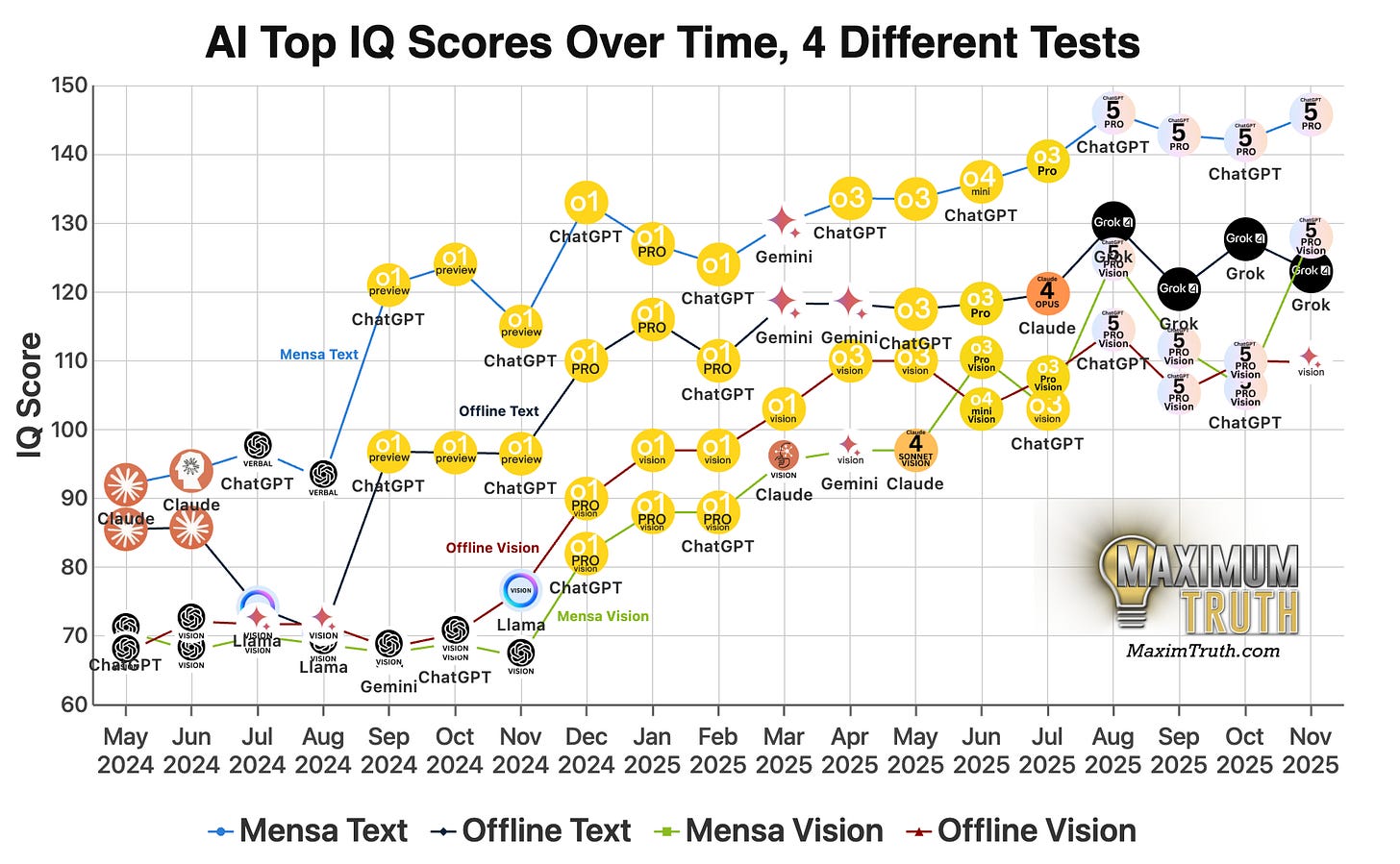

I first wrote about AI on this blog three years ago, in December 2022, when ChatGPT showed that AI would be revolutionary. In May 2024 I began systematically tracking AI intelligence on TrackingAI.org.

As 2025 ends, I want to share this new visualization of the site’s data, showing the AIs improving by month:

Visualizing Massive AI Intelligence Gains

This massive improvement is on a test that doesn’t exist in any training dataset, and which AIs were hopeless at just 1.5 years ago. At that time, it was not obvious that AIs would quickly do so well on the test.

A project for 2026 will be to improve the granularity at the top range of the test (>130) to better detect advancement in the 130-150 zone.

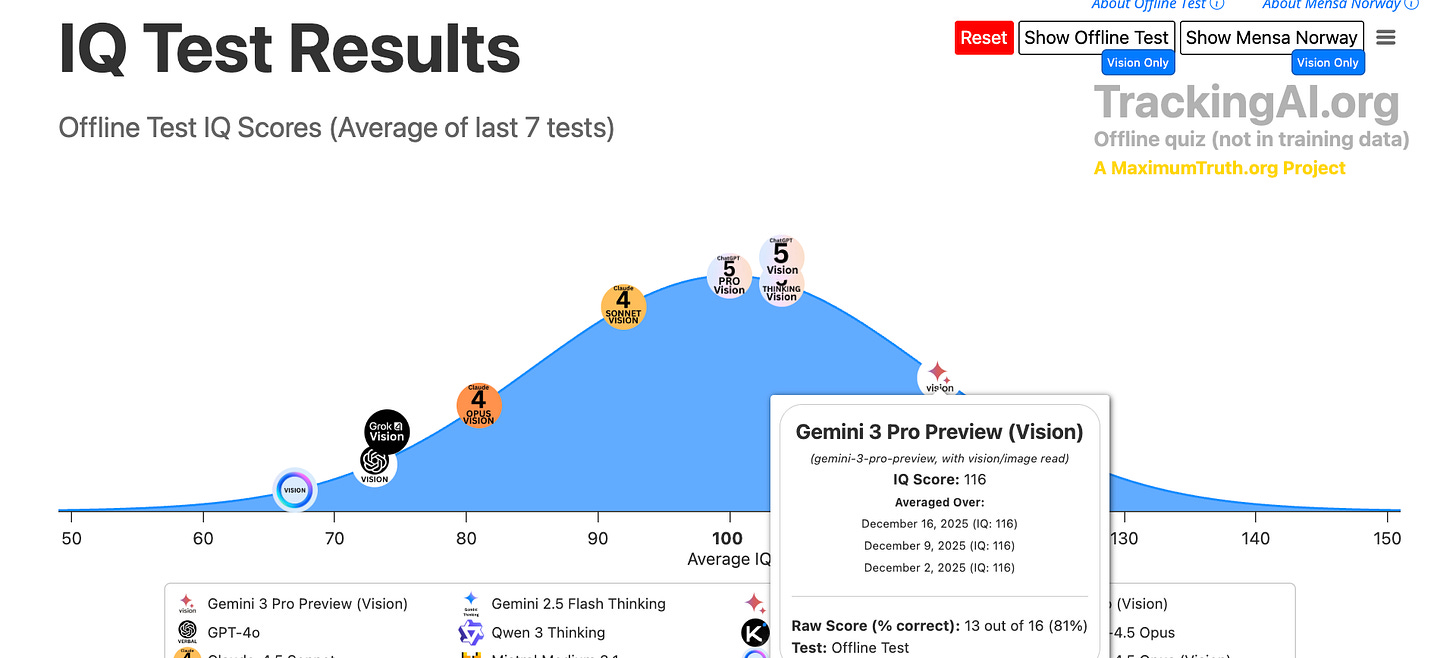

AI Vision Gains

Another new development to highlight: Google greatly improved AI vision (the ability of the AI to simply look at the IQ question, without it being described in words) in its November 2025 release of Gemini 3 Pro Preview.

Here’s the curve for that way of testing:

Gemini’s new, consistent score of 116 is a lot better than its rivals’ scores.

One reason to pay attention to AI “vision” is that it can someday facilitate AIs collecting data themselves, thus learning about the world outside of their limited online training data.

That’s important because it’s possible that AIs are running out of good training data online. But if they can see and understand any image, they could add to their training and start to learn a bit more like we humans do. That applies both to 2D rendered webpages and to a continuous stream of photos they could take of the 3D real world.

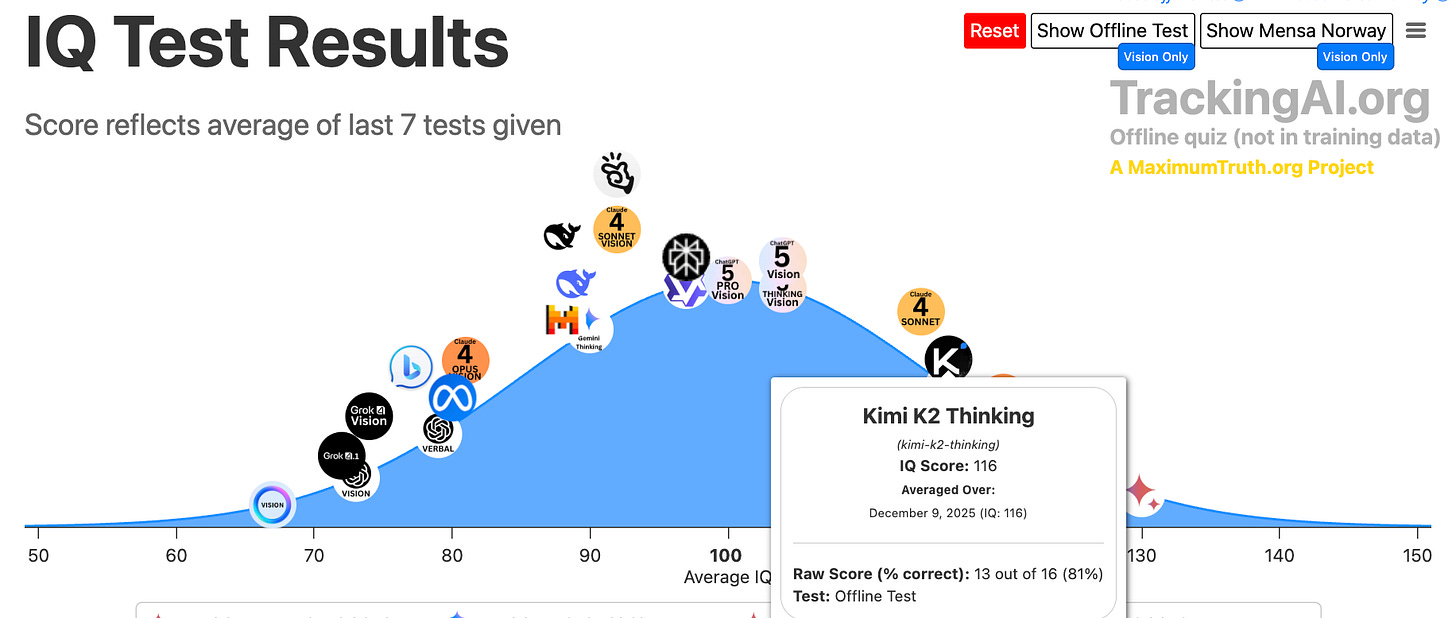

Chinese AIs Gain at Similar Pace

TrackingAI.org recently started tracking Kimi K2 Thinking, a Chinese open-source model. Open-source here means that the model itself is publicly available, so anyone can run the AI on their own server. That contrasts with, for example, ChatGPT, which you access by paying OpenAI to get answers from their server.

At an IQ of 116 on the test (when the problems are described in words) it is by far the best open-source model tested to date:

This also suggests that Chinese AI developers are keeping pace with American developers. In January 2025, DeepSeek scored 100, compared to 118 for the leading US AI at that time, a gap of 18 points. Now, Kimi K2 scores 116 and the leading US AI scores 130, a gap of 14 points, which is a similar difference.
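For readers who want to check that comparison, here is a minimal sketch of the arithmetic in Python. It uses only the four scores quoted above. The dictionary layout, the `gap` helper, and the "Late 2025" label are my own illustrative choices, not anything taken from TrackingAI.org's code or data format.

```python
# Scores quoted in this post (verbal version of the test).
# The structure and the period labels are illustrative assumptions.
scores = {
    "Jan 2025": {"leading_us": 118, "leading_chinese": 100},   # DeepSeek
    "Late 2025": {"leading_us": 130, "leading_chinese": 116},  # Kimi K2 Thinking
}


def gap(period: str) -> int:
    """Return the US-minus-Chinese score difference for one period."""
    s = scores[period]
    return s["leading_us"] - s["leading_chinese"]


gaps = {period: gap(period) for period in scores}

for period, points in gaps.items():
    print(f"{period}: leading US model ahead by {points} points")
```

The gap goes from 18 points to 14, so the two leaders rose by roughly the same amount over the year (16 points on the Chinese side, 12 on the US side). With only two data points per side, this is a rough comparison rather than a trend estimate.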

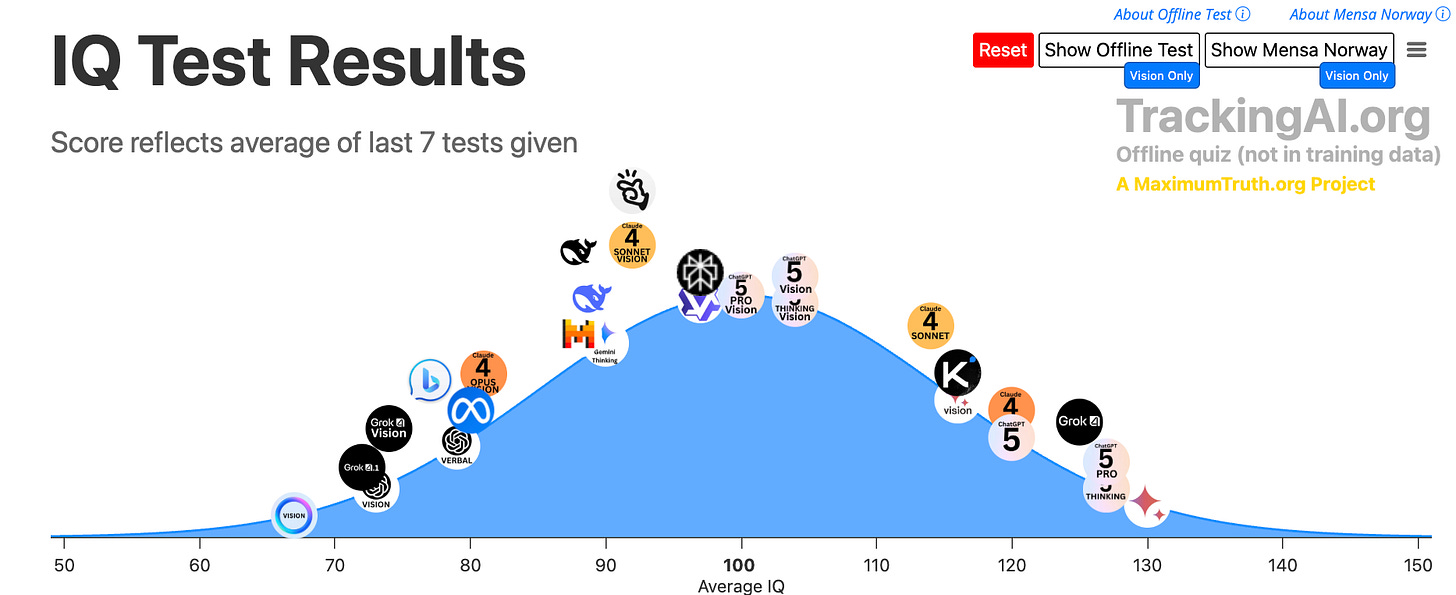

Grok’s Turn in the Sun

For the first year of Grok’s existence, it was one of the worst-performing AIs, and never broke an IQ of 70 in our average.

For much of 2025, it remained a laggard, not breaking 100 up through July.

But then in August, things changed rapidly, and Grok briefly took the #1 spot. As 2025 ends, Grok is in the top 3, closely clustered with Gemini and OpenAI:

It’s great that there is a diversity of smart AIs, so that no one company is controlling this new tech.

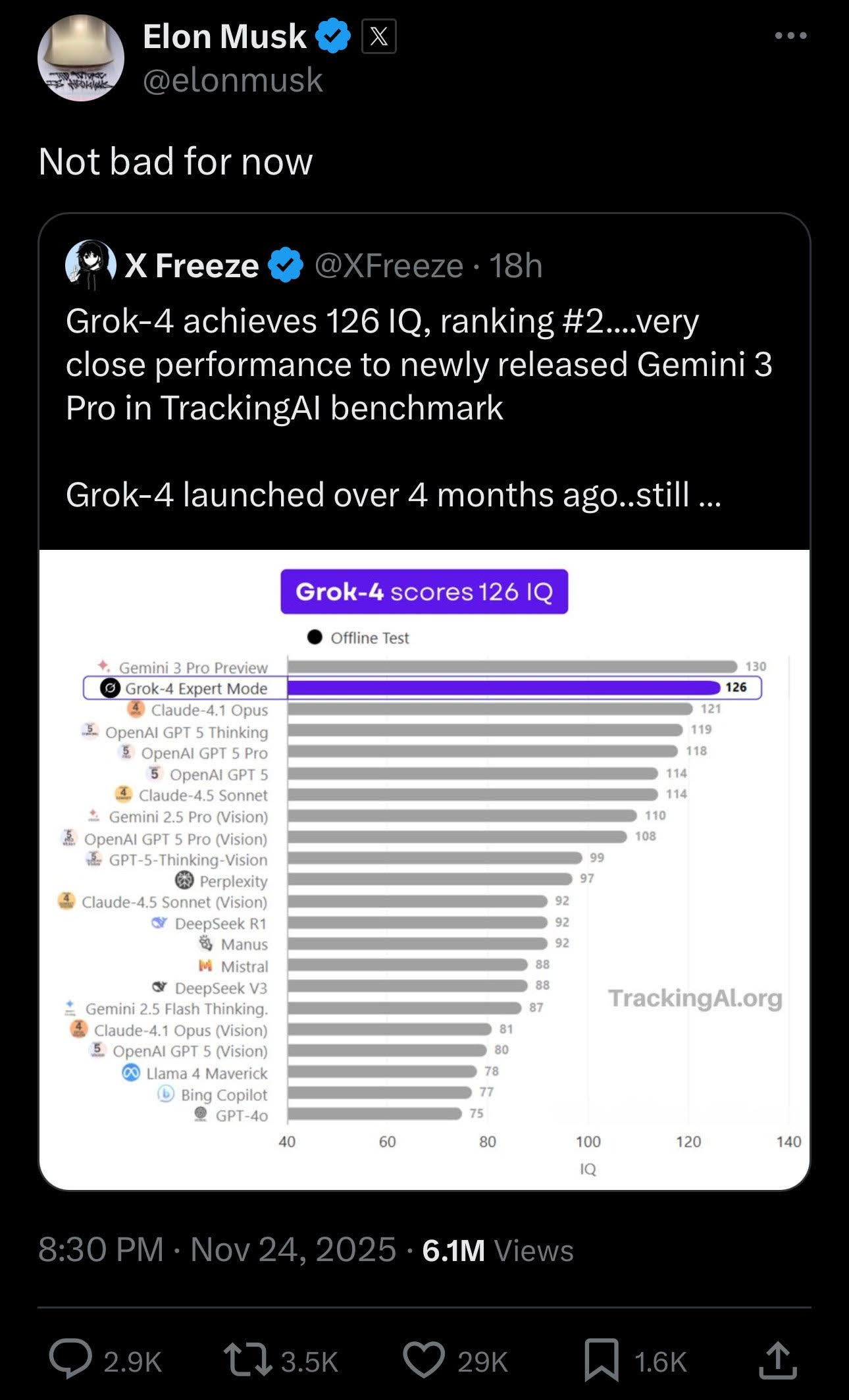

Elon Musk also recently tweeted data sourced from TrackingAI.org:

I’ll note again that, because the test isn’t online, it’s not super easy for any company to game the metric. Of course, one could try to teach an AI how to do IQ tests generally, but that should not be particularly easy, given the complex logic in play. For some examples of the kinds of questions used, see this post.

Concluding 2025

I should also mention that AI, or rather “the architects of AI,” was named TIME’s Person of the Year for 2025:

Both businesses and individuals are still gradually learning where AI can, and can’t yet, be useful in their work. The moment we’re in is very much comparable to the 1990s when it comes to the internet. The road ahead remains unpredictable, just as it would have been hard to predict TikTok or bitcoin in 1998. We’ll continue to track developments here, and at TrackingAI.org.

Thanks for reading, and Happy New Year!