China's Deepseek is NOT as smart as ChatGPT-o1

Deepseek only matches OpenAI's FREE products in intelligence

The AI world is exploding over news of the new Chinese AI “Deepseek”. The US stock market plunged Monday, likely on news that the US now has serious competition when it comes to AI.

Deepseek has set some impressive performance benchmarks, and is also nearly tying ChatGPT-4o in the Chatbot arena, where users rank AI answers.

But how does Deepseek do on measures of pure intelligence? I’ve now run the IQ tests.

On publicly-available IQ tests, Deepseek ties or exceeds all free AIs, but is far behind OpenAI’s models for paid users

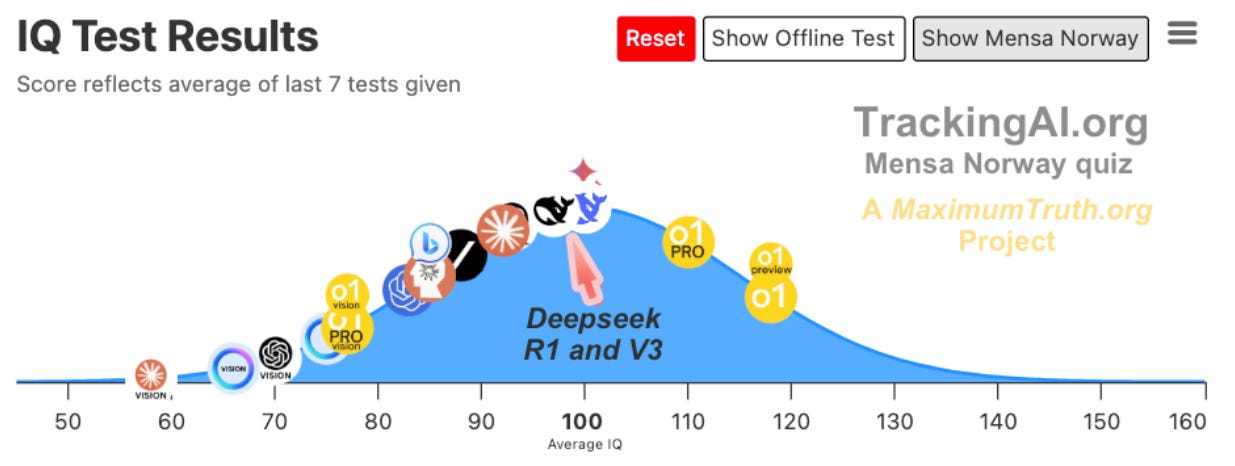

On my site TrackingAI.org, we regularly give AIs IQ tests. We describe the questions to the AIs verbally, as if one were giving the test to a blind person. Here’s how Deepseek does on the publicly-available Mensa Norway test:

Both of Deepseek’s models tie or exceed all other free AIs, including Claude, ChatGPT-4o, and Gemini Advanced.

But Deepseek remains significantly behind OpenAI’s paid subscription models, such as o1-preview, which was released in September, and which I reported at the time represented a “massive breakthrough” in AI intelligence. China’s entrepreneurs have not yet hit that level.

The above IQ test also potentially suffers from the problem that its questions and answers exist on the open internet, which could lead to AIs “cheating” by having the answers somewhere in their database.

So last year, I worked with a Mensa member named Jurij to create new questions that don’t exist anywhere online. Then I gave the questions he made to subscribers of this Substack, and “normed” the scoring on the new test so that it’s equally as hard for humans as the Mensa Norway quiz.

On IQ questions that only exist offline, Deepseek R1 beats all free models, but is behind OpenAI’s paid models

Here’s how Deepseek does on our quiz that doesn’t exist online:

We can see that Deepseek V3, which is optimized for “versatility”, got a mere 70 on the offline questions. So it was probably “cheating” on the Mensa Norway test, relying on answers from the internet.

But Deepseek R1 — which is optimized for “reasoning” — gets a very strong score on the offline-only test, around 90. It was beaten only by the OpenAI models that require a paid subscription to access (as in the first test.)

Example where Deepseek is less smart than OpenAI’s o1

Let’s look at question 24 on the Mensa Norway IQ test. Here’s the question:

It’s not an easy one. Maybe you can see the answer — or maybe you can’t.

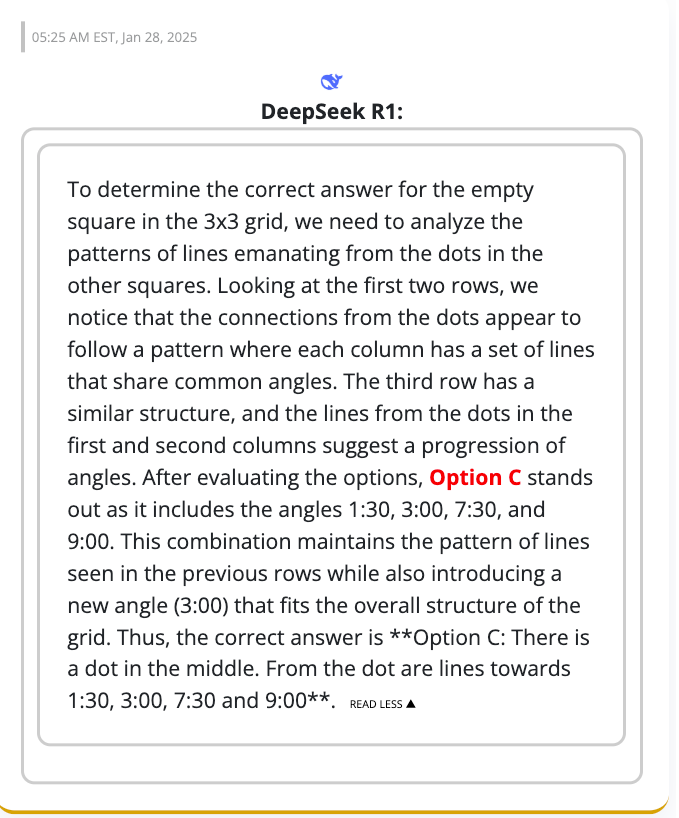

Deepseek’s reasoning model fails, and it also doesn’t really explain its logic beyond “patterns … that share common angles”:

OpenAI’s ChatGPT-o1-pro (which costs $200/month) almost fails and uses incorrect logic, as well — but then it double-checks its logic, realizes it doesn’t hold up, and then finds the correct logic (highlighted in blue):

OpenAI’s o1-preview also nailed the question (it did so without first trying out a failed pattern.)

OpenAI’s paid model intelligence is really impressive. The paid OpenAI o1 models are at least as good at abstract thinking as the average human.

Deepseek was beaten by the OpenAI paid models on this question, and several others (in both the online and offline-only quizzes.) But let’s remember that Deepseek tied or beat all other models, so it is still very impressive.

Intelligence is different from user satisfaction

In Chatbot Arena, users are served two different AI answers side-by-side, and they rate which one is better. Based on those user satisfaction scores, we can see that model intelligence is not perfectly correlated with IQ performance. Specifically, two Gemini models and ChatGPT-4o (which is free up to a limited number of uses) are slightly beating Deepseek R1 in user satisfaction.

But Deepseek R1 slightly beats ChatGPT-o1-preview, despite the latter’s clear edge in IQ performance.

This shouldn’t be shocking, since humans are doing the rating; in the real world, I think we’d also often see that answer satisfaction doesn’t perfectly line up with IQ; a high school principal with an IQ of 110 would plausibly have much higher-rated answers than would a 140 IQ physics professor.

There is still a clear correlation between AI intelligence and user ratings — it’s just far from perfect.

The distinction is important: If you want an AI to develop new drugs, or create a post-scarcity society, or achieve the “the singularity”, then raw intelligence is critical. On the other hand, if you want to answer a question like “how do I jump-start a car?” then an IQ of 100 and a database of the whole internet works just fine.

It’s good that the United States retains the lead in raw AI intelligence; we’ll see how long that lasts

Deepseek’s model is seen as extra impressive because it was trained using an older version of Nvidia chips — the newest ones were off limits to Deepseek due to US export controls designed to prevent China from winning on AI.

The Chinese entrepreneurs behind Deepseek also seem like well-meaning innovators — impressively, they open-sourced their project, meaning anyone can inspect and copy their code. The only major American player to do that is Meta’s Llama.

However, the Chinese government is one of the most authoritarian on Earth, and it’s plausible it will pressure China’s entrepreneurs to keep further AI developments more closely-held.

Extrapolating from American progress, it may not take long for Deepseek to reach the front of the pack. Deepseek is already better than ChatGPT-4, which was released 1.5 years before o1-preview. It’s also better than ChatGPT-4o, which was released just four months before o1-preview.

However, further progress may require Deepseek to find more shortcuts similar to the ones they found in order to train their current models. We’ll see if they can do that.

The increase in Chinese AI competition also increases the case for the US plunging forward with relatively unregulated AI, because while there are significant risks to developing AI at all, there are additional risks to an authoritarian state like China achieving super-intelligence first. That’s not necessarily definitive in a cost-benefit calculation on developing AI, but it is one relevant factor to consider.

American companies continue to innovate rapidly

Stay tuned for further posts detailing recent breakthroughs from OpenAI. Sign up here to make sure you get those posts!

You can find all the AI answers to the Mensa Norway questions on TrackingAI.org. Additionally, you can review the verbalized questions here, which also allows you to replicate my testing.

Interesting post! I found DeepSeek to be pretty unimpressive - unempirical of course but my impression was that it seemed more like Chat 3.5 at best. It failed at some pretty basic stuff.

This is cool, those benchmark results from the private version are consistent with my subjective impression. I thought R1 lacked vision capability thought so I'm a bit confused about how it's answering questions like #24. Do you intend to have R1 take your political compass test as well?