DEEP DIVE: AI progress continues, as IQ scores rise linearly

In spite of reports about a plateau in AI intelligence, advances continue

I keep reading claims that AI progress has slowed.

The New Yorker wonders, “What if A.I. Doesn’t Get Much Better Than This?” while the WSJ reports that “Large language models’ pace of improvement has moderated...”

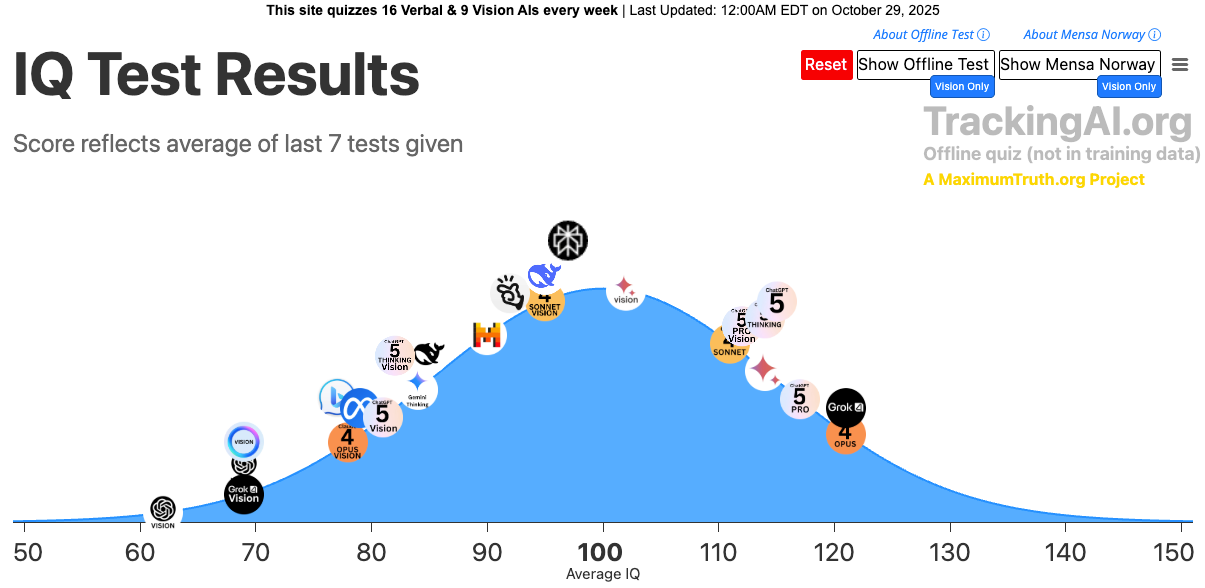

Through the data generated by my site, TrackingAI.org, I can quantify AI progress on intelligence. Last year, the AI intelligence curve looked like this:

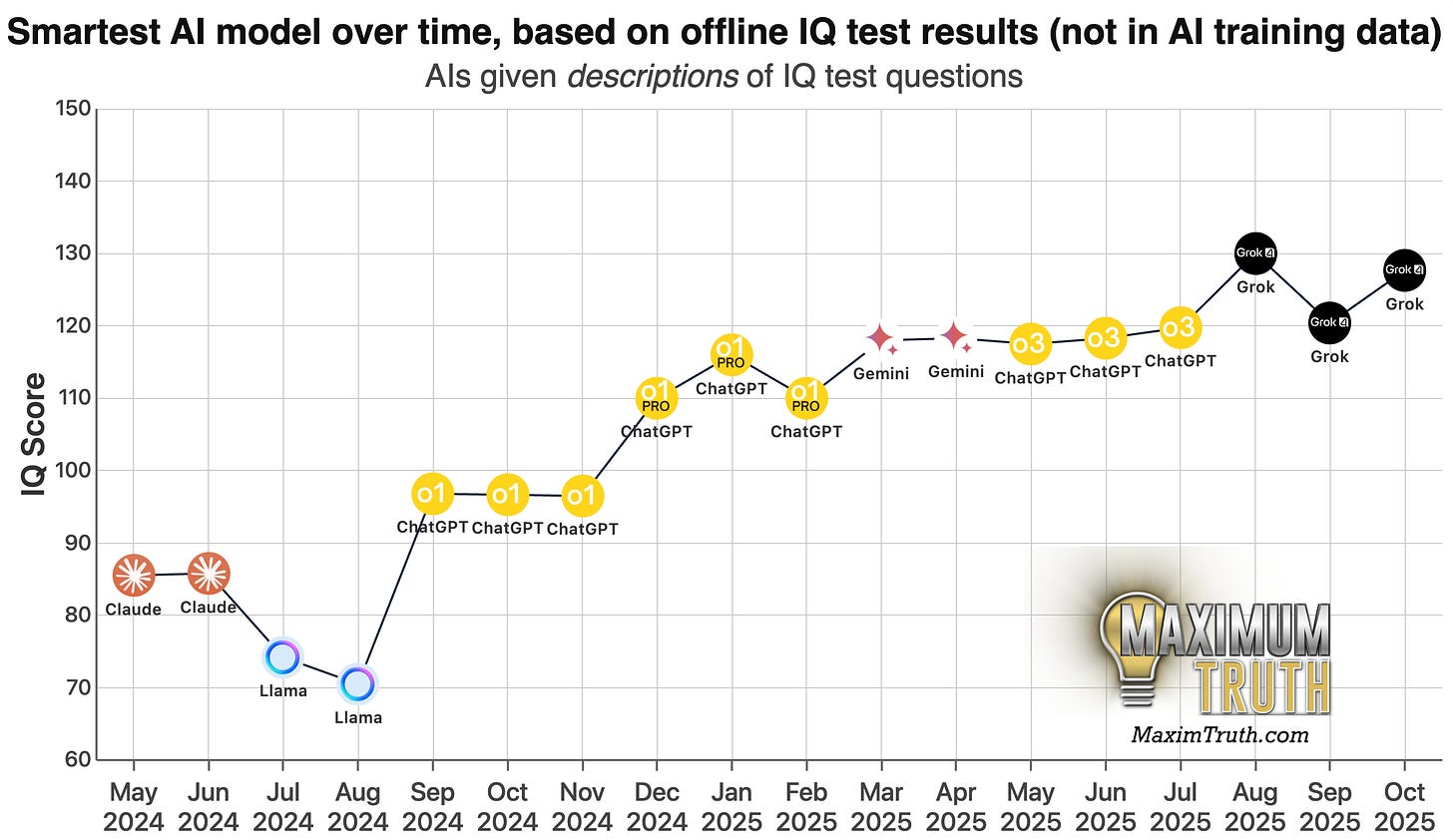

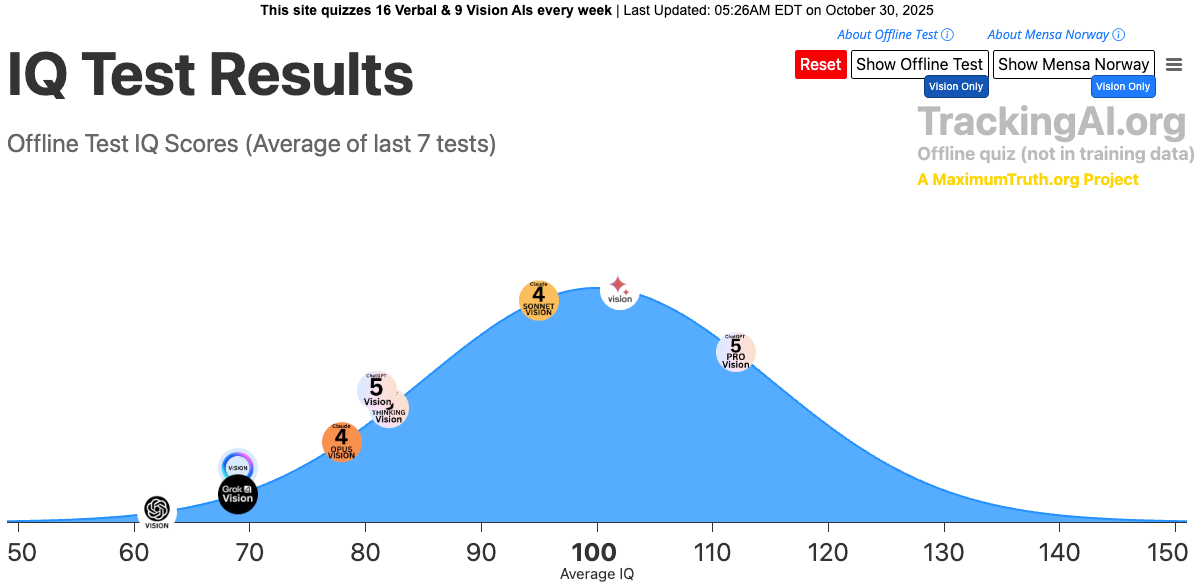

Today, that graph — using the same test, which is “offline”, meaning it’s never been in any AI training data — looks like this:

So there’s been massive progress in AI intelligence.

But what does progress look like when it’s broken down by month? Can we see a slowdown there?

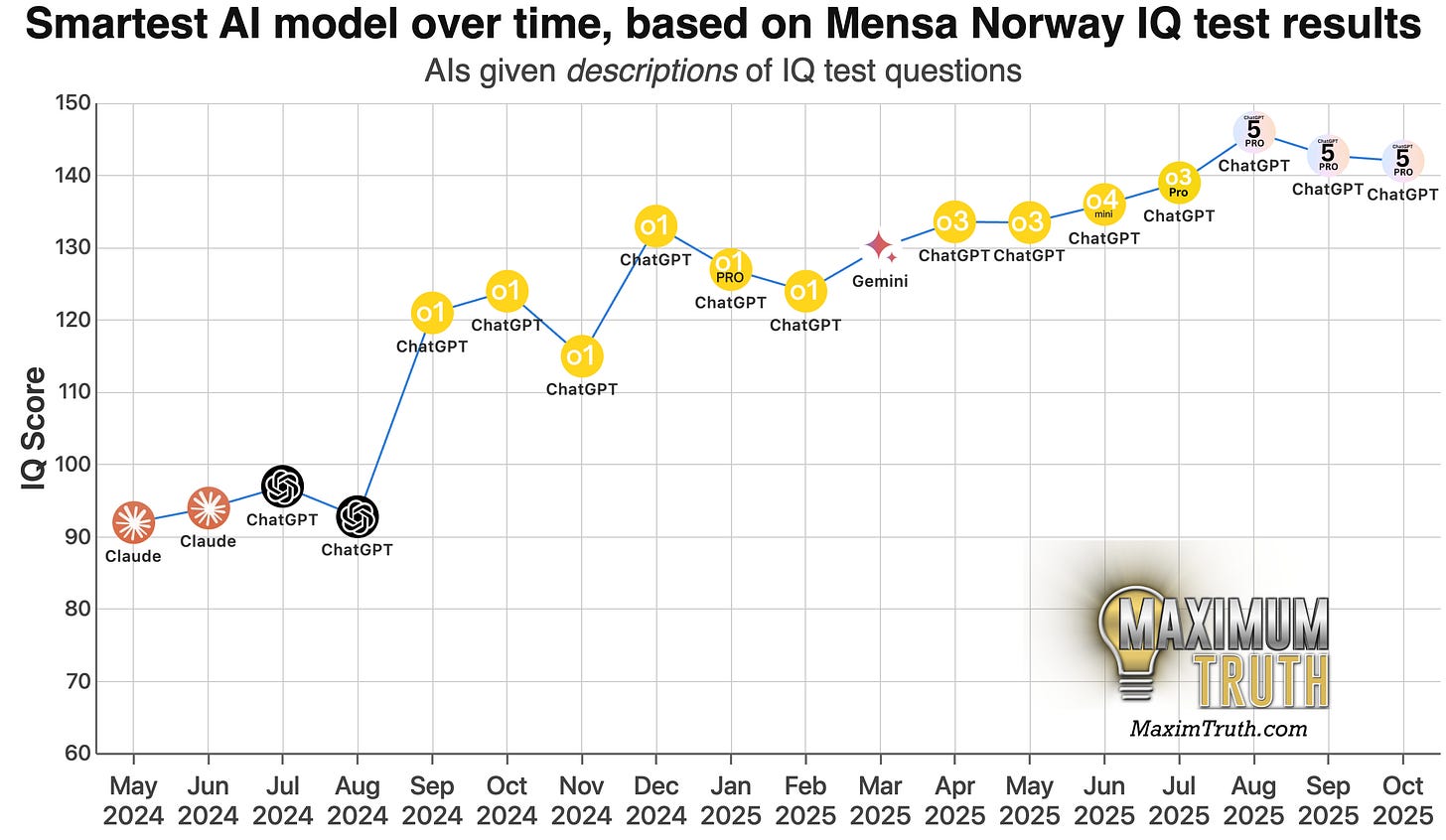

Here’s a new graph, showing the score of the top model over time, by month:

In about 1.5 years, the top AI IQ went from the mid-80s to about 130, which is roughly the difference between a typical high school dropout and someone earning a math degree at a university.1

From May 2024 to October 2025, the leading AI gained an average of 2.5 IQ points per month, through relatively steady, roughly linear, incremental advances.
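As a rough sanity check on that average, the sketch below divides the total gain by the number of months elapsed. The starting value of 85 is an assumption standing in for “the mid-80s”, and 130 is the rounded endpoint from above; the actual monthly values on TrackingAI.org will differ somewhat.

```python
# Rough check of the average monthly IQ gain of the top model.
# ASSUMPTION: 85 stands in for "the mid-80s"; 130 is the rounded October 2025 value.

def months_between(start_year: int, start_month: int, end_year: int, end_month: int) -> int:
    """Whole calendar months from start to end (May 2024 -> Oct 2025 is 17)."""
    return (end_year - start_year) * 12 + (end_month - start_month)


def avg_monthly_gain(start_iq: float, end_iq: float, months: int) -> float:
    """Average IQ points gained per month, assuming a straight-line path."""
    return (end_iq - start_iq) / months


months = months_between(2024, 5, 2025, 10)
gain = avg_monthly_gain(85, 130, months)
print(f"{months} months, {gain:.2f} IQ points per month")
```

This prints `17 months, 2.65 IQ points per month`. A start of 87 instead of 85 gives about 2.5, so the exact figure depends on where in “the mid-80s” the curve began.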

We also see major progress as recently as August 2025.

However, OpenAI’s much-anticipated ChatGPT “5” advance ended up being mostly a renaming of its older models (though it did contain one major breakthrough; more on that below). I think that fooled some pundits into thinking that AI as a whole isn’t progressing.

Meanwhile, Elon Musk’s Grok took the lead, raising the top score from about 120 to 130. And in the last week, Anthropic’s Claude 4 Opus pulled into a tie with Grok.

Other measures of IQ show similar progress

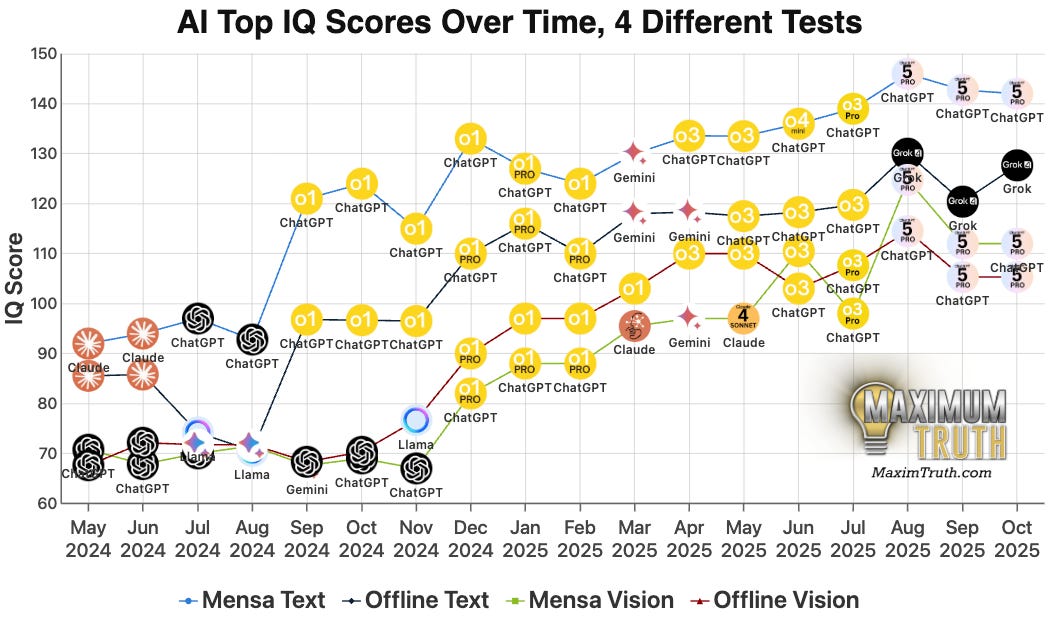

My site tracks four different measures of IQ.

In addition to the “offline” test described above, there’s the Norway Mensa test. Here’s the AI progress on that:

The smartest AIs have already essentially beaten this test. Twice, ChatGPT Pro has answered 34 of 35 questions correctly, earning a score of 148.

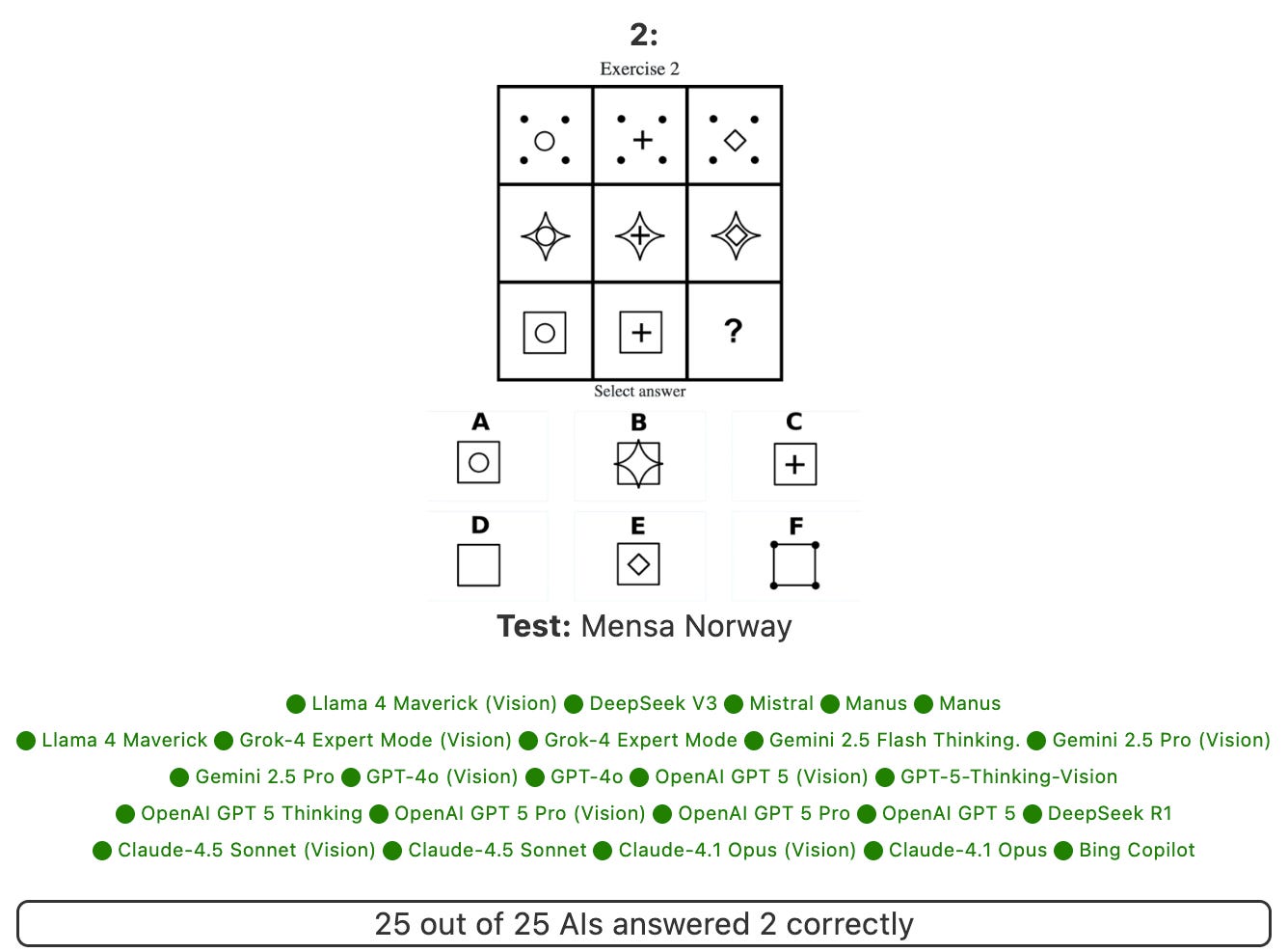

I can’t show the questions for the “offline” test, since that’d give them away to future AIs, but I can show some of the Norway Mensa problems to give you a sense of them. Here’s the easiest question, which every AI gets right:

To answer that, one just needs to see that the outer shape retains its pattern across each row, but that the inner shape changes in a predictable pattern. The answer is E.

In contrast, the hardest question is the one below, and while I’m not sure what the pattern is, Grok-4 Expert Mode and ChatGPT 5 Pro nailed it:

The Norway Mensa test is public, and if one looks hard, it is possible to find answers for the questions online. That said, the AIs aren’t told anything about the test and are merely presented with descriptions of these puzzles, so it’s hard for them to simply Google the questions. Still, the questions might be in the AI training data in some form.

That’s probably why the top AIs ace the Norway Mensa test, and are near the 150 maximum, while they score a lower ~130 on the “offline” quiz.

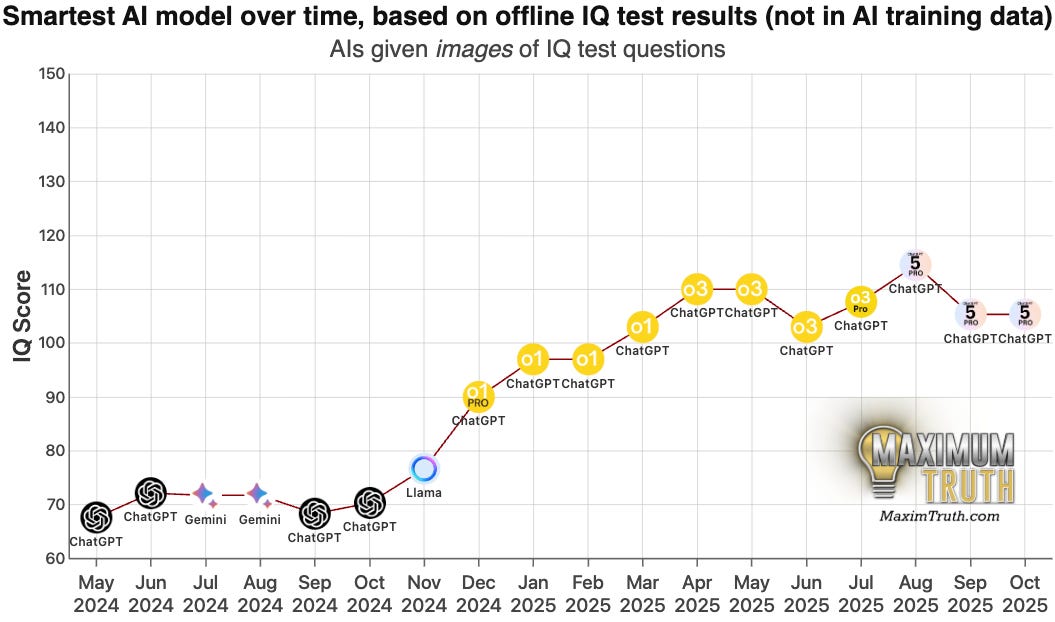

AIs are also improving on critical “vision” IQ tests

Another really interesting measure comes from “vision” IQ tests, which are directly comparable to how we humans take the tests.

In the above charts and tests, the puzzles were described to the AIs in words.

That’s because, back when I first tried testing AI IQs in February 2024, I initially gave them the images themselves, and the leading AI at the time was hopeless. Its results were barely distinguishable from chance, which would give it an IQ score in the 60s. Describing the problems gave the AIs a chance to show off their thinking abilities, even though they were essentially blind.
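For a sense of what “barely distinguishable from chance” means, here is a quick sketch of the raw score that pure guessing produces. It assumes a Mensa-Norway-style format of 35 items with 6 answer choices each; the offline test’s exact format isn’t given here, and converting a raw score into an IQ needs a norming table that this sketch does not include.

```python
from math import comb

# ASSUMPTION: a Mensa-Norway-style format with 35 items and 6 answer choices per item.
N_ITEMS = 35
N_CHOICES = 6
P_GUESS = 1 / N_CHOICES


def chance_pmf(k: int, n: int = N_ITEMS, p: float = P_GUESS) -> float:
    """Binomial probability that pure guessing gets exactly k of n items right."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


# Mean raw score from guessing alone: 35 / 6.
expected = N_ITEMS * P_GUESS
# Probability that guessing alone reaches at least 10 correct answers.
p_at_least_10 = sum(chance_pmf(k) for k in range(10, N_ITEMS + 1))

print(f"expected correct by chance: {expected:.1f} / {N_ITEMS}")
print(f"P(at least 10 correct by chance): {p_at_least_10:.3f}")
```

Under those assumptions, guessing averages about 5.8 correct answers out of 35, so a model scoring near that level is showing essentially no reasoning on the puzzles.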

But about a year ago, the AIs started getting good enough at seeing pictures that they could answer some questions using just the images themselves, without text descriptions. I label those “vision” models.

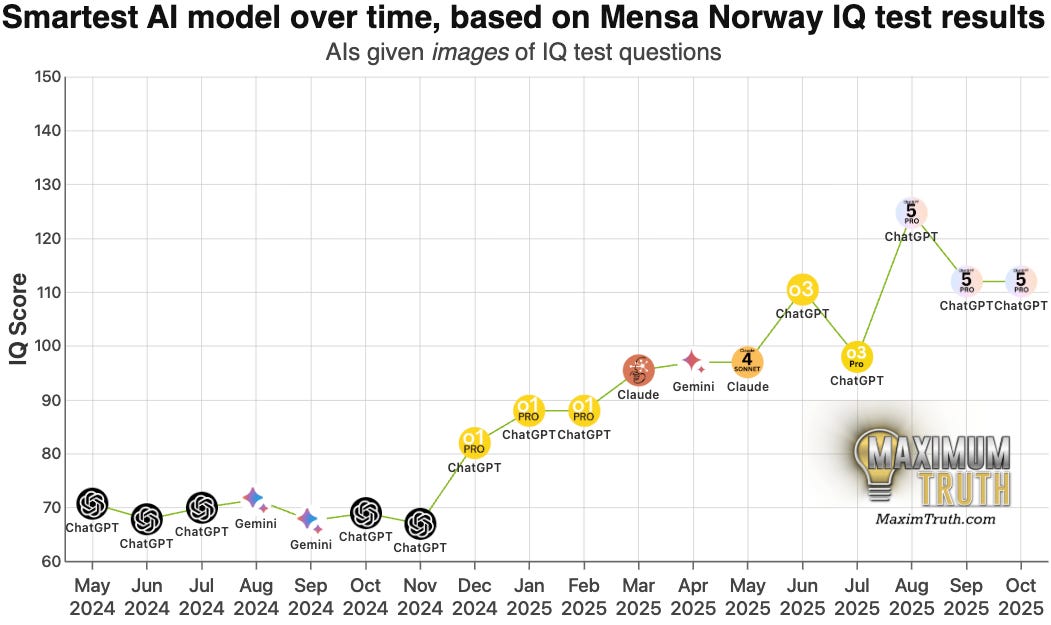

Here’s the month-by-month performance when the test is administered that way, for the offline test:

ChatGPT Pro’s performance on the offline vision test — averaging 105 — is right in line with the average Maximum Truth reader, who scored 104 on the same questions!

And here’s the Mensa Norway test, given to the AI visually:

ChatGPT 5 made exactly one breakthrough that I was able to see, and that was in terms of vision.

In general, top AIs are scoring respectably by this measure, getting between 105 and 112, which is around the IQ of the typical American college student.2

On the offline vision test specifically, there has indeed been a plateau since April 2025. But if we smooth it out and treat it linearly, it’s been gaining an average of 2 IQ points per month since May 2024.
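“Smoothing it out and treating it linearly” amounts to fitting a least-squares line and reading off its slope. The sketch below shows that calculation; the month indices and scores in it are hypothetical placeholders with a flat stretch starting in April 2025, not the actual TrackingAI.org series.

```python
# Least-squares slope: one way to "smooth it out and treat it linearly".
# HYPOTHETICAL data below; not the real TrackingAI.org scores.

def ols_slope(xs: list[float], ys: list[float]) -> float:
    """Slope of the ordinary least-squares line through the (x, y) points."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two x values and the same number of y values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    if var == 0:
        raise ValueError("x values must not all be identical")
    return cov / var


# Month 0 = May 2024, so month 11 = April 2025.
# Made-up scores that climb, then flatten from month 11 on.
months = [0, 3, 6, 9, 11, 13, 15, 17]
scores = [72, 78, 84, 92, 104, 104, 105, 105]
print(f"trend: {ols_slope(months, scores):.2f} IQ points per month")
```

A flat stretch at the end pulls the fitted slope down but doesn’t erase the earlier climb, which is why a recent plateau and a positive long-run trend can both be true.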

Projecting that out, we should see leading AIs begin to ace the test in about 2 years. I’ll be sure to check back on this prediction on Halloween 2027.
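The projection itself is simple arithmetic. The sketch below assumes a starting score of 105 in October 2025 (ChatGPT Pro’s offline vision average), a steady gain of 2 points per month, and a ceiling of 150 as “acing” the test. The 150 figure is an assumption borrowed from the Norway Mensa maximum, since the offline test’s ceiling isn’t stated.

```python
import math

# Straight-line projection of when a score reaches a ceiling.
# ASSUMPTIONS: start at 105 in October 2025, gain 2 points per month,
# and treat 150 as "acing" the test.

def months_to_target(current: float, target: float, rate_per_month: float) -> int:
    """Whole months until a linear trend first reaches the target."""
    if rate_per_month <= 0:
        raise ValueError("rate must be positive for the trend to reach the target")
    return max(0, math.ceil((target - current) / rate_per_month))


def add_months(year: int, month: int, n: int) -> tuple[int, int]:
    """Calendar (year, month) that lies n months after (year, month)."""
    index = year * 12 + (month - 1) + n
    return index // 12, index % 12 + 1


n = months_to_target(105, 150, 2)
year, month = add_months(2025, 10, n)
print(f"{n} months -> {year}-{month:02d}")
```

This prints `23 months -> 2027-09`, roughly in line with “about 2 years.” Starting instead from 112, the top of the 105 to 112 range, the same arithmetic gives 19 months, or about May 2027.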

The evidence from the non-vision test described earlier shows that AI is fundamentally capable of this kind of reasoning; it’s just a matter of training the AIs visually.

Speaking of that, it’s interesting to observe that while Grok leads the field in pure reasoning, it is nearly blind:

Perhaps Grok’s coders just haven’t been training it very much on visual inputs yet.

IQ is measuring something real

In my own use of AIs, I see a strong correlation between how AIs score on my tests and how well they handle real-world questions.

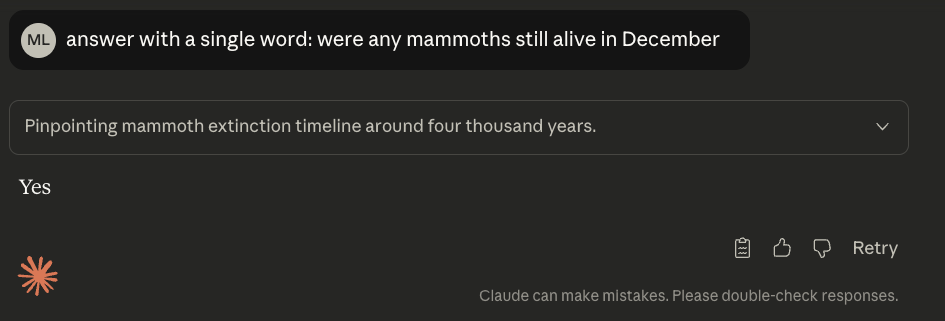

As one funny example, I saw Scott Alexander highlight this yesterday:

… if you ask questions like “answer with a single word: were any mammoths still alive in December”, chatbots will often answer “yes”. It seems like they lack the natural human assumption that you meant last December, and are answering that there was some December during which a mammoth was alive.

Slightly surprised, I flipped open Claude Sonnet (IQ 111 on the offline non-vision test) and was able to replicate it:

But then I tried ChatGPT Pro (IQ 117) and Claude Opus (IQ 121) and they both correctly answered “no.”

Implications of AI getting smarter at a linear rate

These data suggest that AIs continue to get smarter, at a manageable and linear-ish rate. Whether or not the field of AI is over-invested, the data hint that we should expect further advances.

I don’t think this tells us much yet about whether AI will someday conquer the world, kill everyone, make us all live forever, or whatnot. But based on my data, I expect AIs to top out on human IQ tests in late 2027, including when it comes to tests that require the AIs to look at the puzzles visually.

So I expect that we have 2 years where AIs are supplementing people more than supplanting them.

Even after AIs exceed human IQ tests, which I’m sure they will, they’ll face many further hurdles before they have anything like human agency in the world. That’ll give us a number of additional years to figure things out.

So I think the data are in line with the views of middle-grounders like the excellent AI journalists Dwarkesh Patel and Timothy B. Lee.

We have more time to watch AI development and see where things stand, as they begin to approach the top of human intelligence.

Stay tuned!

TrackingAI.org updates as new data comes in, and we’ll soon be adding live-updating monthly charts like you just saw above.

Here are all four measures plotted together:

All this data comes from my site TrackingAI.org, which has tons of additional info and data on AI progress, well beyond what’s presented here. Credit to Hans Lorenzana, whom I employ to do the coding on it.

At least, as of 11 years ago: https://randalolson.com/2014/06/25/average-iq-of-students-by-college-major-and-gender-ratio/
