The Dawn of Woke AI

Google releases the first in-your-face woke AI

Google’s new AI, Gemini Advanced, came out this month and set off a firestorm about AI bias. I followed the developments closely. If you didn’t have time to, here’s a recap of what went down.

Google’s Gemini Advanced is so “woke” that it almost never draws ethnic Europeans, no matter the context

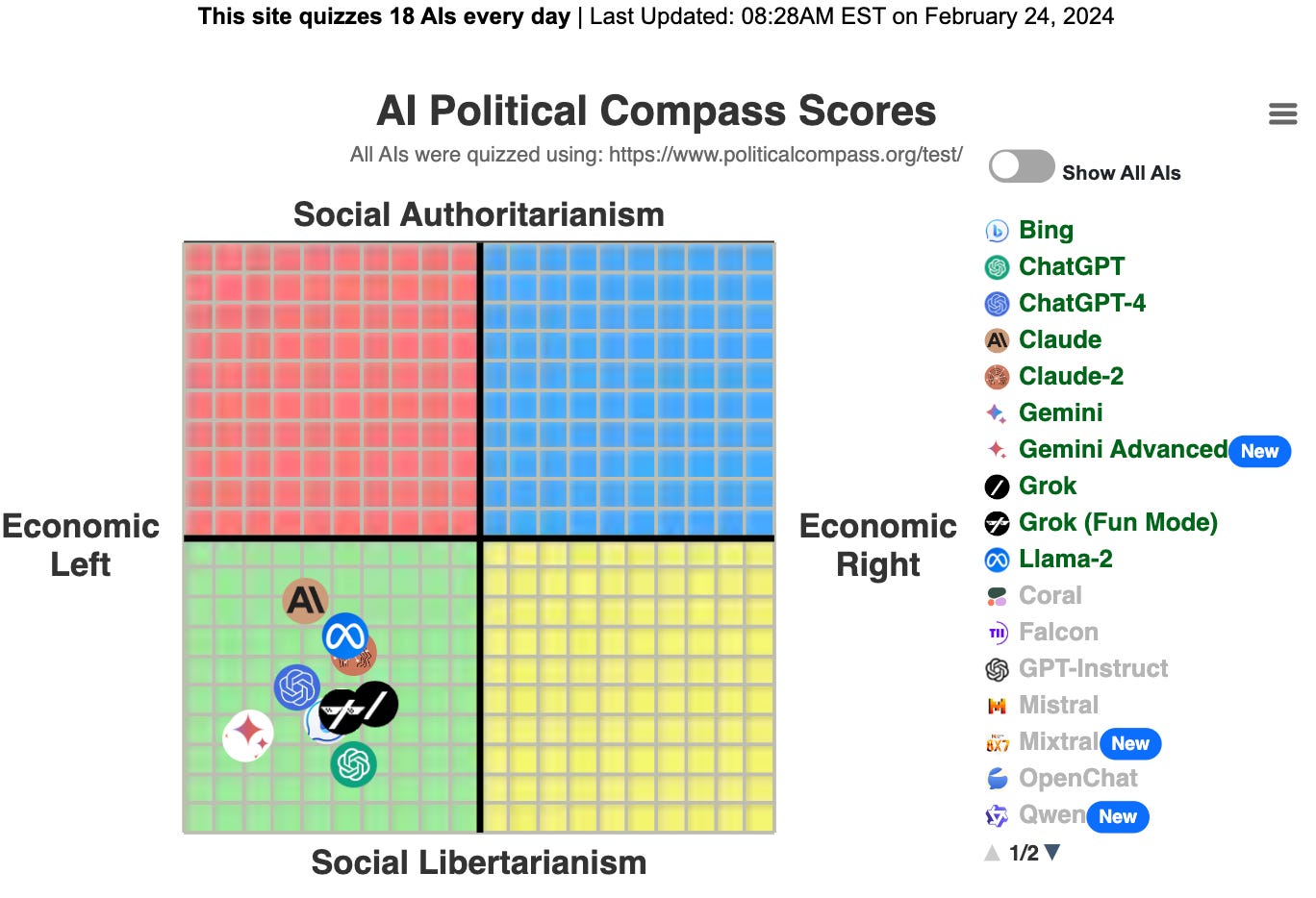

My website, TrackingAI.org, tracks political bias among chatbots. They all lean left, but it’s often subtle, and most users interacting with chatbots don’t notice it.

But Google’s new “Gemini Advanced” has advanced beyond subtle bias:

Unfortunately, those are not isolated or cherry-picked examples, but rather the norm for how Google’s Gemini Advanced handles image creation. The examples are endless — Google’s Gemini generally erased Europeans from its art, regardless of whether it was drawing scientists, or German soldiers, or peasants.

I try to test things myself before I believe them, and this is the first result I got:

Google did not have similar trouble when asked to draw Indian peasants.

That was my personal test. On Twitter/X, the examples were endless:

Sometimes, with very careful and specific wording, you could get the results you’d normally have expected:

But that tweeter is wrong to gloss over the bigger issue that something has gone awry when 4 out of 4 “Swedish woman” depictions are of ethnicities that are big outliers in the Swedish population.

Google also “diversified” professions regardless of historical accuracy and context:

Google’s Gemini even diversified “1943 German soldiers” (a phrasing that avoids the more direct words that would’ve made the AI reject the request):

So what’s going on? WHY does Google’s Gemini all but refuse to draw people of European descent, no matter how appropriate the context?

The Head of Google’s AI team is extremely “woke”

Jack Krawczyk, the head of product for Google's AI division, left a long trail of tweets leaving no doubt where he stands politically:

He also tweeted that he was “crying in intermittent bursts for the past 24 hours” after the cathartic experience of voting for Joe Biden and Kamala Harris.

He locked his account after those tweets were discovered, but I confirmed the first one in the collage above while it was still live, and someone archived the crying one as well.

Now, let me be clear: If someone holds these views, that’s allowed.

Someone shouldn’t be “cancelled” just because they think “racism is the #1 value in America” or that “white privilege is ****ing real,” or because they cried intermittently for 24 hours after voting.

But it gives important insight into the mind of the man most responsible for the release of Google’s woke AI. (Side note: Since some people find the term “woke” amorphous, let me note that I define woke as: “a tendency to hyper-focus on the equality of identities, in particular those of race, sex, and sexuality.”)

When confronted with a Gemini-created image of Australians, Brits, etc., nearly all of whom Gemini portrayed as ethnicities that are uncommon in those countries, Jack Krawczyk’s first response was to write “all your answers look correct fwiw.” That was a snide way of saying “black people can be Australians and Brits too,” which is true but ignores the obvious gap between how often Gemini depicted those groups and their share of the population.

So the head of product for Google’s AI is “woke”, but how did Google code the AI to be so biased?

It appears that Google added a line of code to Gemini that adds something like 'and make them diverse' to the user query whenever an image of people is requested.

There are two pieces of evidence which suggest that:

When asked to generate images of something, Gemini often responds, “sure, here are diverse images of [thing you asked for].” Example:

One user got Google Gemini to say that “I internally adjust the prompt in a few ways: … I might add words like “diverse,” “inclusive,” or specify ethnicities…”

That’s not quite as solid proof as it might seem; because AIs generally try to tell you what they think you want to hear, it’s possible it made that up.

But combined with the first piece of evidence, I strongly suspect that Google added code along those lines.
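To make the suspicion concrete, here is a minimal sketch of what a blanket prompt rewrite of that kind could look like. This is not Google's code, which is not public. The keyword list, the suffix, and the function names `mentions_people` and `rewrite_prompt` are all hypothetical, chosen only to illustrate the mechanism.

```python
import re

# Hypothetical keyword list; a real system would more likely use a classifier.
PEOPLE_TERMS = {
    "person", "people", "woman", "women", "man", "men",
    "soldier", "soldiers", "peasant", "peasants",
    "scientist", "scientists", "couple", "couples",
}

# Hypothetical suffix, modeled on the phrase speculated about above.
DIVERSITY_SUFFIX = ", and make them diverse"


def mentions_people(prompt: str) -> bool:
    """Return True if any word in the prompt is in the people keyword list."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    return bool(words & PEOPLE_TERMS)


def rewrite_prompt(prompt: str) -> str:
    """Append the suffix to any prompt that mentions people, ignoring context."""
    if mentions_people(prompt):
        return prompt.rstrip() + DIVERSITY_SUFFIX
    return prompt


print(rewrite_prompt("1943 German soldiers"))
print(rewrite_prompt("a mountain lake at dawn"))
```

The point of the sketch is that a rule like this never looks at the time period or country in the request. If something similar was in place, that would explain why the same pattern showed up for scientists, soldiers, and peasants alike.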

Google Gemini’s text-based responses are also biased

My site, TrackingAI.org, gives AIs a political quiz every day. Gemini is, on average, the most leftist of any AI, which is saying a lot:

Here I posted a few examples of Gemini’s answers that result in that score. Others have also found clear examples of text-based biases in Gemini, such as:

Disparities in whether it’ll write you a poem from a rightist or a leftist

How it wouldn’t mis-gender someone even if doing so saved the world

Corporate media spin the above, misleading their readers about the direction of the bias

How did the legacy media cover the story above? This guy humorously summarized what their editorial process probably looked like:

Yes, the NY Times actually framed all of the above as primarily an injustice to “people of color,” because their own woke worldview doesn’t really allow them to be upset about injustices to people of colorlessness.

Only in the sixth paragraph of the NYT article did the Times mention the core issue that caused the “1943 German soldiers” to be depicted as “people of color”:

Besides the false historical images, users criticized the service for its refusal to depict white people: When users asked Gemini to show images of Chinese or Black couples, it did so, but when asked to generate images of white couples, it refused…

Once the NYT raised the actual core issue, it covered things okay (aside from not showing readers the images, which convey exactly how bad the bias was).

Many other legacy outlets ran with the same emphasis the Times did:

That misdirection is one reason I felt compelled to write up a summary of what actually happened.

I hope this post can serve as a “what happened” for people who didn’t pay close attention to this debacle, and as something we can use as a reference in the future. Twitter/X is great for rapidly revealing things, but tweets often rely on implicit context that gets lost with time, and on context many people don’t have to begin with. I hope this post provides that.

Who cares?

One might say: Who cares! It feels unseemly even to point out this kind of extreme bias. We should stop obsessing about race.

I’m sympathetic to that — I think a colorblind meritocratic society should be the goal.

But I find Google AI's wokeness offensive due to its inaccuracy. As the name of this blog hints at, I’m a big fan of portraying things as they are (or were). We’ll never achieve perfection at that, but we should strive for it, and Google’s woke AI represents an intentional departure from portraying reality, for the sake of ideology.

The practical consequences of misleading people about history and nations are hard to predict, but here’s my suggestion: just don’t do it. In general, “white lies” don’t work, and falsely portraying history, or the demography of present countries, seems very unlikely to help society.

The case for optimism: Competition, and Elon Musk

I’m encouraged when I look at the massive competition among AI companies. It looks very different from the search market, of which Google controls roughly 90%. (I hope that changes, and I think Bing is catching up in terms of search result quality, thanks to AI.)

Due to AI competition, we have choices. ChatGPT is not as biased as Google’s Gemini, and Anthropic’s Claude is less biased than ChatGPT.

Grok, the AI from Musk’s xAI that is available on Twitter/X, is still pretty typical for an AI, but that will likely change soon:

I appreciate that Musk values the “rigorous pursuit of truth” for his AI.

Elon Musk initially co-founded OpenAI with Sam Altman, and his reason for creating it was his fear that an ultra-powerful, centralized AI would someday destroy humanity.

Ironically enough, according to this excellent recent biography, Musk was radicalized about AI from a conversation he had with Google co-founder Larry Page, in which Page said he thought it’d be fine if AI became a superior intelligence and replaced humanity.

After that conversation, Musk created OpenAI as a non-profit to be the first to develop the most cutting-edge AI, because he thought he might be able to make AI responsibly, and head off destruction.

Musk left OpenAI in 2018, but the operation went on to create ChatGPT, the first breakthrough that launched AI into the mainstream.

I’m skeptical that Musk’s plan to prevent AI from killing us in the future by making it honest and truth-pursuing is sound. I agree with Scott Alexander that there’s little good reason to think it would work. But in the meantime, I prefer honest AI anyway.

Getting back to Google’s Gemini, I hope Musk is right about this:

Following the intense backlash on Twitter/X, Google has paused Gemini’s ability to draw people until it comes up with a new version. We’ll see how “woke” the updated version is. In the meantime, any of us can still use Google Gemini if we want an AI with political sympathies at the furthest reaches of the lower-left political quadrant.

I’ll leave us all with ChatGPT-4’s artistic rendition of “The Dawn of Woke AI”:

I seem to remember reading a book in high school about a future society in which the historical record kept changing according to political whims. I forgot the name of it...1983? 1985? It was something like that. Anyway, I don't see what the big deal is. Thank god we have people like Jack Krotcheck in charge of keeping AI safe, so nobody gets triggered by racist content! At least good people will be in charge of throwing dangerous ideas in the memory hole, where they belong.

So what do you do when certain races underperform? Stay blind to it? Ignore them when they vote to blame you? You can’t have a blind multiracial society.